Now Reading: Why data preprocessing is crucial for neural networks

-

01

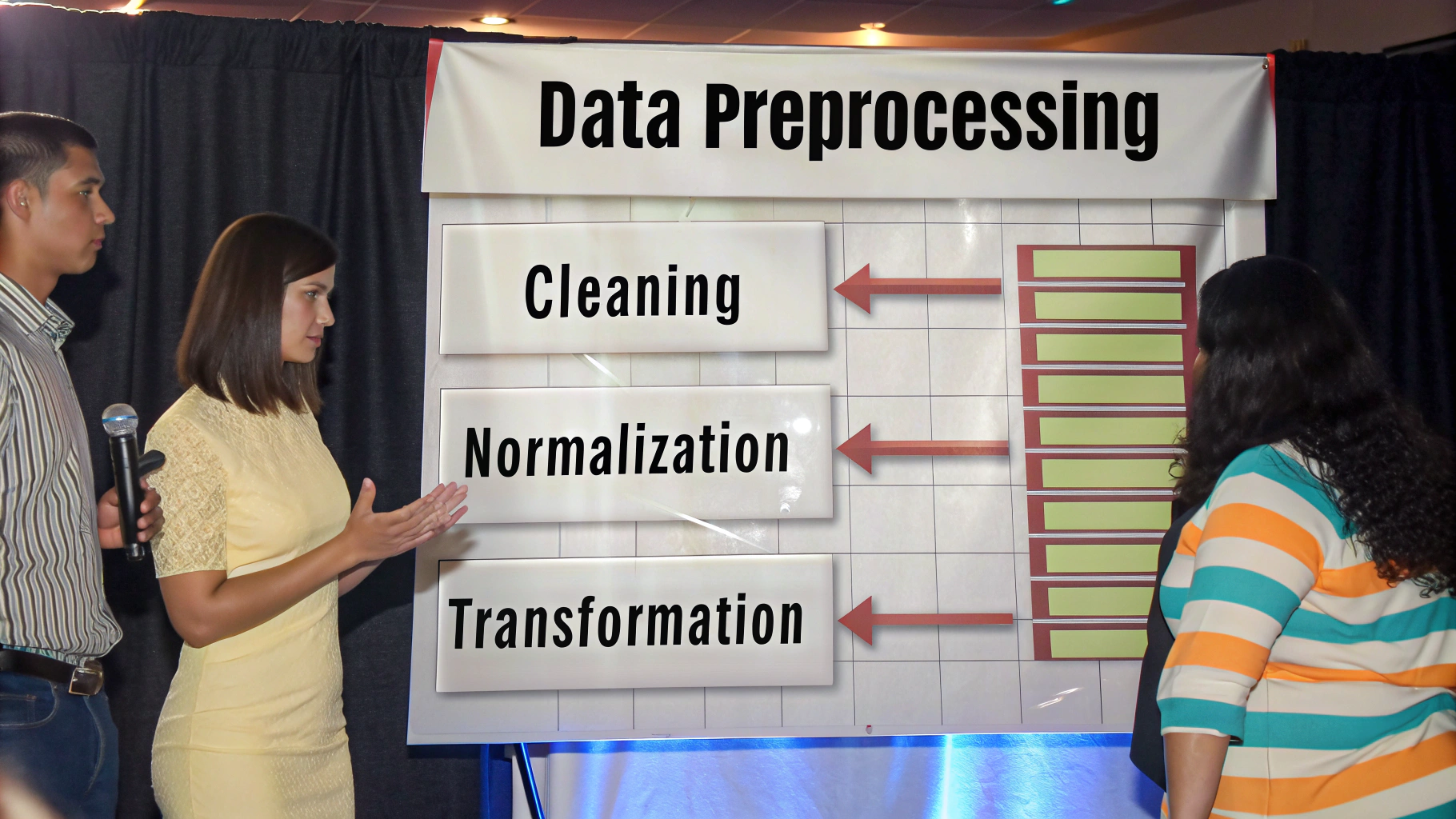

Why data preprocessing is crucial for neural networks

Why data preprocessing is crucial for neural networks

In the world of Machine Learning, and particularly with Neural Networks (NNs), the focus often lies on complex architectures, cutting-edge activation functions, and sophisticated optimization algorithms. However, a less glamorous but arguably most critical step determines the ultimate success of any model: Data Preprocessing. Neglecting this stage is like trying to build a skyscraper on a foundation of sand the structure might look impressive, but it’s destined to fail. For a compelling neural network model, the quality of its input data is paramount. The raw data, fresh from collection, is almost never in a format suitable for immediate consumption by an NN. Preprocessing transforms this raw, messy data into a clean, structured, and informative format that the network can efficiently learn from. This meticulous preparation impacts everything from training speed and convergence to model accuracy and generalization.

1. Cleaning the mess: Handling missing and noisy data

Real-world datasets are inherently flawed. They contain missing values (e.g., a blank entry in a spreadsheet) and noisy data (outliers or incorrect measurements).

- Missing data imputation: Missing entries can severely skew the results or even cause the training process to crash. Preprocessing involves techniques to fill these gaps, such as imputing the missing value with the mean, median, or mode of the feature, or using more advanced model-based imputation.

- Outlier detection and treatment: Outliers data points that significantly deviate from others can disproportionately influence the NN’s learned weights, leading to a poorly generalized model. Identifying and capping, transforming, or removing these outliers is essential for robust training.

2. Standardization and normalization: Bringing data to a common scale

This is perhaps the most fundamental and impactful step. Neural networks, especially those employing algorithms like Gradient Descent, are highly sensitive to the scale of input features. If one feature has a range from 0 to 1, and another from 100 to 100,000, the feature with the larger range will dominate the cost function and the gradient calculation.

- Normalization (Min-Max Scaling): Rescales the features to a fixed range, typically $[0, 1]$.$$X_{norm} = \frac{X – X_{min}}{X_{max} – X_{min}}$$

- Standardization (Z-Score Normalization): Rescales data to have a mean ($\mu$) of 0 and a standard deviation ($\sigma$) of 1. This is often preferred, as it handles outliers better than min-max scaling.$$X_{std} = \frac{X – \mu}{\sigma}$$

Bringing all features to a uniform scale ensures that the NN’s optimizer treats all features equally, leading to faster convergence during training and preventing saturation of activation functions (like the sigmoid function), which can cause the vanishing gradient problem.

3. Feature engineering: Maximizing signal, minimizing noise

Feature engineering is the art of creating new features or transforming existing ones to make the underlying signal more apparent to the neural network. While modern NNs, through deep layers, can learn features, hand-crafted features often significantly boost performance.

- Handling Categorical Data: NNs require numerical input. Categorical features (like “Color: Red, Blue, Green”) must be converted.

- One-Hot Encoding: Creates a binary column for each category. This is crucial for nominal data, preventing the network from imposing an arbitrary ordinal relationship.

- Label Encoding: Assigns an integer to each category, suitable for ordinal data (e.g., “Size: Small=1, Medium=2, Large=3”).

- Dimensionality Reduction: Techniques like Principal Component Analysis (PCA) reduce the number of input features while retaining most of the variance (information). This reduces the model’s complexity, combats the “curse of dimensionality,” and often improves generalization by removing noise.

4. Splitting and augmentation: Preparing for training and evaluation

The final phase of preprocessing involves structuring the data for the model life-cycle.

- Train-validation-test split: The dataset must be split into three distinct sets:

- Training set: Used to train the model.

- Validation set: Used to tune hyperparameters and prevent overfitting during training.

- Test set: Held back until the very end to provide an unbiased evaluation of the final model’s performance.

- Data augmentation: Particularly vital in Computer Vision and NLP, augmentation artificially expands the training dataset by creating slightly modified copies of existing data (e.g., rotating images, translating text). This dramatically improves the model’s ability to generalize to unseen data.

In the lifecycle of a Neural Network project, a well-preprocessed dataset is the gift that keeps on giving. It is a prerequisite for achieving optimal performance, ensuring rapid and stable training, and ultimately building a model that generalizes effectively to real-world, unseen data.

Spending 80% of project time on data preparation might sound excessive, but it’s a widely accepted adage in the field. When the training phase inevitably presents challenges poor accuracy, slow convergence, or instability the first place a savvy practitioner looks is not the learning rate or the architecture, but back to the data. A clean, scaled, and well-engineered dataset transforms a frustrating, high-variance learning process into a stable, efficient, and successful one. Data preprocessing is not just a step; it is the foundation of high-performance deep learning.