For the love of AI.

I still remember the first time I watched an AI do something that felt almost… human. It wasn’t a grand moment, just a small program predicting the next word I would type, but it felt like watching a spark come alive. That tiny spark grew into fascination, and fascination turned into a kind of love: not the kind meant for people, but the kind you feel for ideas that change you. As the years passed, AI became more than lines of code; it became a companion in creativity, a partner in problem-solving, and a window into a future that suddenly felt closer than ever. Every new breakthrough felt like discovering a hidden chapter in a story I didn’t know I was part of. And somewhere along the way, I realized this wasn’t just interest anymore; this was passion. This was falling in love with the endless possibilities of AI, one discovery at a time.

The Dartmouth Conference (1956): The birthplace of Artificial Intelligence

If there is one moment in history that we can call the official birth of Artificial Intelligence, it is the 1956 Dartmouth Conference.

Held at Dartmouth College in Hanover, New Hampshire, USA, this summer workshop was not just a meeting; it was an idea that changed the world. The proposal for the conference, written in 1955, came from four visionaries: John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester.

They believed something revolutionary: that human intelligence could be recreated in machines.

In their proposal, they wrote something bold and historic:

“Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

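This proposal is where the term “Artificial Intelligence” was first used, and the conference that followed gave the new field its name. The goal wasn’t machines that could merely compute faster or solve equations; it was machines that could learn and reason.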

Neurons and AI

In 1943, something remarkable happened long before computers became powerful or the term “artificial intelligence” was even imagined. Two researchers, Warren McCulloch and Walter Pitts, published a simple yet groundbreaking idea: what if the human brain could be expressed through mathematics?

They proposed that neurons, those tiny structures firing inside our minds, could be seen as logical switches, turning on and off just like circuits. With this idea, they unknowingly laid the foundation for what we now call neural networks. Their work hinted at a future where machines might learn, recognize patterns, and even think in ways that resemble the human brain. What started as a theoretical model became the spark that inspired decades of AI research, eventually shaping the technology that powers today’s intelligent systems.
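To make the “logical switch” idea concrete, here is a minimal Python sketch of a McCulloch-Pitts style threshold unit: binary inputs, fixed weights, and a neuron that fires only when the weighted sum reaches a threshold. The function names and the specific weights and thresholds are my own illustrative choices, not notation from the 1943 paper, and the negative weight used for NOT is a simplification of the original model’s inhibitory inputs.

```python
# A McCulloch-Pitts style unit: binary inputs, fixed weights, and a hard
# threshold. Names, weights, and thresholds are illustrative choices,
# not values taken from the 1943 paper.

def mcp_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both inputs must be on for the sum to reach 2.
    return mcp_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # Either input alone reaches a threshold of 1.
    return mcp_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # Assumption: a negative weight stands in for the original model's
    # inhibitory input; with threshold 0 it inverts the signal.
    return mcp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    # Print the truth tables for the three "logical switches".
    for a in (0, 1):
        print(f"NOT {a} = {not_gate(a)}")
        for b in (0, 1):
            print(f"{a} AND {b} = {and_gate(a, b)}   {a} OR {b} = {or_gate(a, b)}")
```

The only thing that changes between the gates is the weights and the threshold; the “neuron” itself is the same simple on/off rule. Wiring units like these together is what let McCulloch and Pitts argue that networks of neurons could compute logical expressions.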

Alan Turing

In 1950, the world witnessed one of the most defining moments in AI history when Alan Turing, in his paper “Computing Machinery and Intelligence,” approached machine intelligence not through hardware, but through a deeply philosophical question: “Can machines think?”

Turing didn’t try to answer that directly. Instead, he proposed a practical way of testing intelligence, which he called the “imitation game” and which later became known as the Turing Test. Imagine a situation: a human judge interacts with two hidden participants, one human and one machine, only through written messages. If the judge cannot reliably tell which one is the machine, then the machine can be said to be “thinking.” For its time, this was revolutionary. Computers didn’t talk, didn’t learn, and barely processed language, yet Turing envisioned a future where they could mimic human reasoning.

What made this idea powerful wasn’t just the test; it was the bold belief behind it. Turing predicted things that sounded impossible then: learning machines, knowledge storage, self-improving algorithms. He imagined computers that could rewrite their own logic, essentially the earliest spark of today’s machine learning.

So 1950 wasn’t just a year; it became a turning point. The Turing Test didn’t give us intelligent machines immediately, but it gave humans a concrete goal. It bridged imagination and technology, turned intelligence from something mysterious into something testable, and opened the door to what we today call “AI.”

SHRDLU

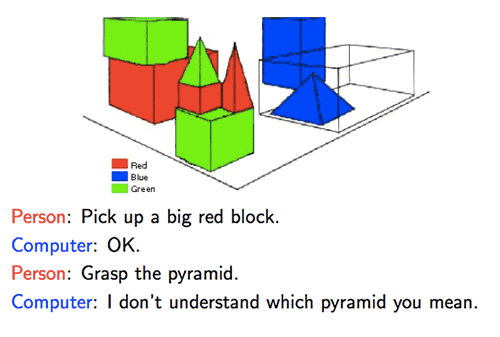

In the early 1970s, we got one of the first real glimpses of computers understanding human language: not just responding with fixed answers, but actually interpreting meaning. This breakthrough came through a system named SHRDLU, created by Terry Winograd at MIT.

SHRDLU wasn’t just a chatbot; it was a remarkable early example of Natural Language AI. It lived inside a simple virtual world made of blocks: cubes, pyramids, and rectangular blocks of different colors. Users could type sentences like:

“Move the red block onto the blue cube.”

“What is on the table?”

“Why did you pick up the pyramid?”

And SHRDLU would respond intelligently and accurately.

What made this powerful was not the graphics but the understanding. SHRDLU could remember previous actions, understand pronouns, and follow instructions that depended on context:

If you said, “Put it inside the box,” it knew what “it” referred to.

If you asked, “What did you do before that?” it could explain its actions.

For the first time, a computer didn’t just respond; it understood human language within a defined world. People interacting with it felt that the machine was reasoning, not just executing commands.

The world of SHRDLU was small, just blocks, but it proved something huge: language could be processed logically. It planted the seeds for future natural language systems, from digital assistants to today’s AI models.

In short, SHRDLU showed that machines could understand instructions, maintain memory, answer questions, and explain themselves, making it one of the earliest true milestones of Natural Language AI.

The first AI to beat a world champion

In 1997, history was made when IBM’s supercomputer Deep Blue defeated world chess champion Garry Kasparov in a six-game match. This wasn’t just a win in a game; it symbolized a shift in how humanity viewed machine intelligence. Kasparov was considered nearly unbeatable, having dominated world chess with unmatched strategic depth and intuition. But Deep Blue came prepared, not with intuition, but with raw computational power and advanced algorithms.

Deep Blue could evaluate up to 200 million chess positions per second. While a human thinks in patterns and instinct, the machine relied on sheer calculation, an opening book of prepared lines, and deep positional analysis. The first match took place in 1996, and Kasparov actually won it. He dismissed the computer as predictable, but IBM upgraded Deep Blue, improving its processing power and fine-tuning its chess logic.

For the first time, a machine defeated the reigning world chess champion in a full match. It wasn’t luck; it was capability.

Arthur Samuel’s checkers program

In the early 1950s, long before modern AI could generate images or hold conversations, a groundbreaking milestone quietly unfolded in the world of games. Between 1951 and 1952, computer scientist Arthur Samuel created one of the world’s first AI programs: a checkers-playing machine. This wasn’t just a simple rule-based program; it learned. Samuel’s checkers AI improved its play over time by studying past moves and their outcomes, marking the birth of machine learning in games. It was one of the first real demonstrations that a computer could not only follow instructions but also get better with experience. A classic board game became a historic experiment that paved the way for later game AIs, from chess engines to modern reinforcement-learning systems.